PATHCAD team demonstrate sensor development for autonomous vehicles at the British Motor Museum

What if it rains? Indeed, what if it snows or the mist rolls in? Will your autonomous vehicle still be able to guide you safely to your destination? These questions are at the heart of PATHCAD (Pervasive low-TeraHz and Video Sensing for Car Autonomy and Driver Assistance), an EPSRC/JLR project conducted by Birmingham, Heriot-Watt and Edinburgh Universities together with the engineering team at Jaguar Land Rover (JLR).

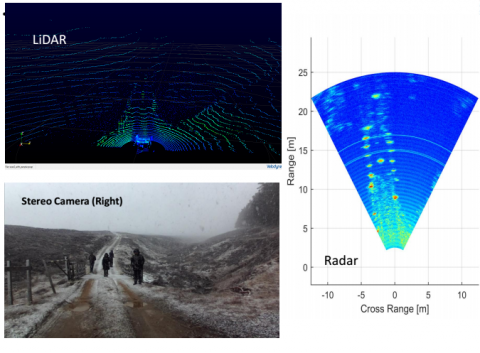

With the project now at its midpoint, the HWU team (Wallace, Mukerjee, de Moraes, Emambakhsh) joined the others at the British Motor Museum in early 2018 to explain and demonstrate the sensors and algorithms under development. The algorithms process low THz radar, optical stereo and LiDAR data, acquired from a suite of sensors mounted on a Land Rover vehicle, to map the landscape and recognise other ‘actors’ in the scene, such as pedestrians, cars, bicycles or even animals, in both on- and off-road scenarios.

As Professor Wallace explained, there is currently no single sensor that works in all conditions. Video cameras are cheap, and a large body of legacy algorithms and software can do much of the job, but distance estimation is generally inaccurate, and in mist and fog the road ahead disappears from view. LiDAR gives good spatial resolution, but can be complex and expensive, and as an optical signal it too degrades rapidly in bad weather. Radar sensors, on the other hand, are relatively unaffected by the weather, but give poor azimuth and elevation resolution, so it’s hard to tell the difference between an elephant and a post box. (OK, so that is an exaggeration, but object discrimination is much harder than in video images!)

At the Sharefair event, organised by Jaguar Land Rover and held at the British Motor Museum in Gaydon, the team demonstrated some of the potential solutions to the above problems. First, new low THz radar technology, coupled with synthetic aperture and time difference of arrival techniques, can markedly improve the spatial resolution of radar. Second, full waveform processing of LiDAR with selective depth filtering can, potentially, find the actor through obscuring weather. Finally, statistical and neural network solutions can be used to perform sensor fusion for scene mapping and actor recognition in all sensor modes, and we are looking at how information may be shared or “transferred” between the different modes in a supervised learning paradigm.
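To give a flavour of what depth filtering of a full LiDAR waveform means, here is a minimal sketch. It is not the PATHCAD method, which is not described here. It assumes the simplest possible approach, a fixed range gate applied to a single return waveform, and runs on entirely synthetic data. The function name `gated_peak_range`, the bin width and the fog and target models are all hypothetical choices made for illustration.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def gated_peak_range(waveform, bin_width_s, min_range_m, max_range_m):
    """Return the range (m) of the strongest return inside a depth gate.

    Hypothetical helper: a plain range gate, not the project's algorithm.
    """
    waveform = np.asarray(waveform, dtype=float)
    # Two-way travel time to range: r = c * t / 2
    ranges = C * np.arange(waveform.size) * bin_width_s / 2.0
    gate = (ranges >= min_range_m) & (ranges <= max_range_m)
    if not gate.any():
        raise ValueError("depth gate contains no samples")
    # Ignore everything outside the gate when searching for the peak
    gated = np.where(gate, waveform, -np.inf)
    return float(ranges[np.argmax(gated)])


# Synthetic waveform (assumed values): strong near-range backscatter from
# fog that decays with distance, plus a weaker target return at 40 m.
bin_width_s = 1e-9  # 1 ns time bins, roughly 0.15 m of range per bin
ranges = C * np.arange(400) * bin_width_s / 2.0
fog = 50.0 * np.exp(-ranges / 5.0)
target = 10.0 * np.exp(-0.5 * ((ranges - 40.0) / 0.3) ** 2)
waveform = fog + target

ungated = float(ranges[np.argmax(waveform)])  # dominated by the fog return
gated = gated_peak_range(waveform, bin_width_s, 20.0, 60.0)
print(f"ungated peak: {ungated:.2f} m, gated peak: {gated:.2f} m")
```

In this toy case the strongest sample in the whole waveform is the fog backscatter next to the sensor, while restricting the search to a 20 m to 60 m gate recovers the target. A practical system would need to choose or adapt the gate rather than fix it in advance.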

Feedback at the fair was very positive. Members of the audience also raised the issue of power consumption, in particular how sensors and algorithms can be tailored to the new energy-efficient electric cars that should be coming off the production line in the near future. Hopefully, we can continue to address these related problems.